LSTM or Transformer as "malware packer"

Hiding Malicious Code in AI Models

The idea of using AI models to hide malicious code is not merely theoretical: studies have already demonstrated that it is feasible in practice. One example is EvilModel, a technique presented in 2021 that embeds malware directly in the parameters of a neural network.

The reported results are striking. In a 178 MB AlexNet model, the researchers embedded 36.9 MB of malware, roughly 20% of the model's size, while keeping the drop in image classification accuracy below 1%. In more extreme tests, where malware replaced up to 50% of the hidden-layer neurons, accuracy fell only slightly: the network retained 93.1% of its original performance.

Such models can also slip past antivirus scanners: a model with embedded malware was not flagged by any of the 58 VirusTotal engines. The reason is that malware hidden in network parameters is scattered across many floating-point values, so it lacks the characteristic byte sequences that traditional signature-based detection looks for.

Technical Implementation

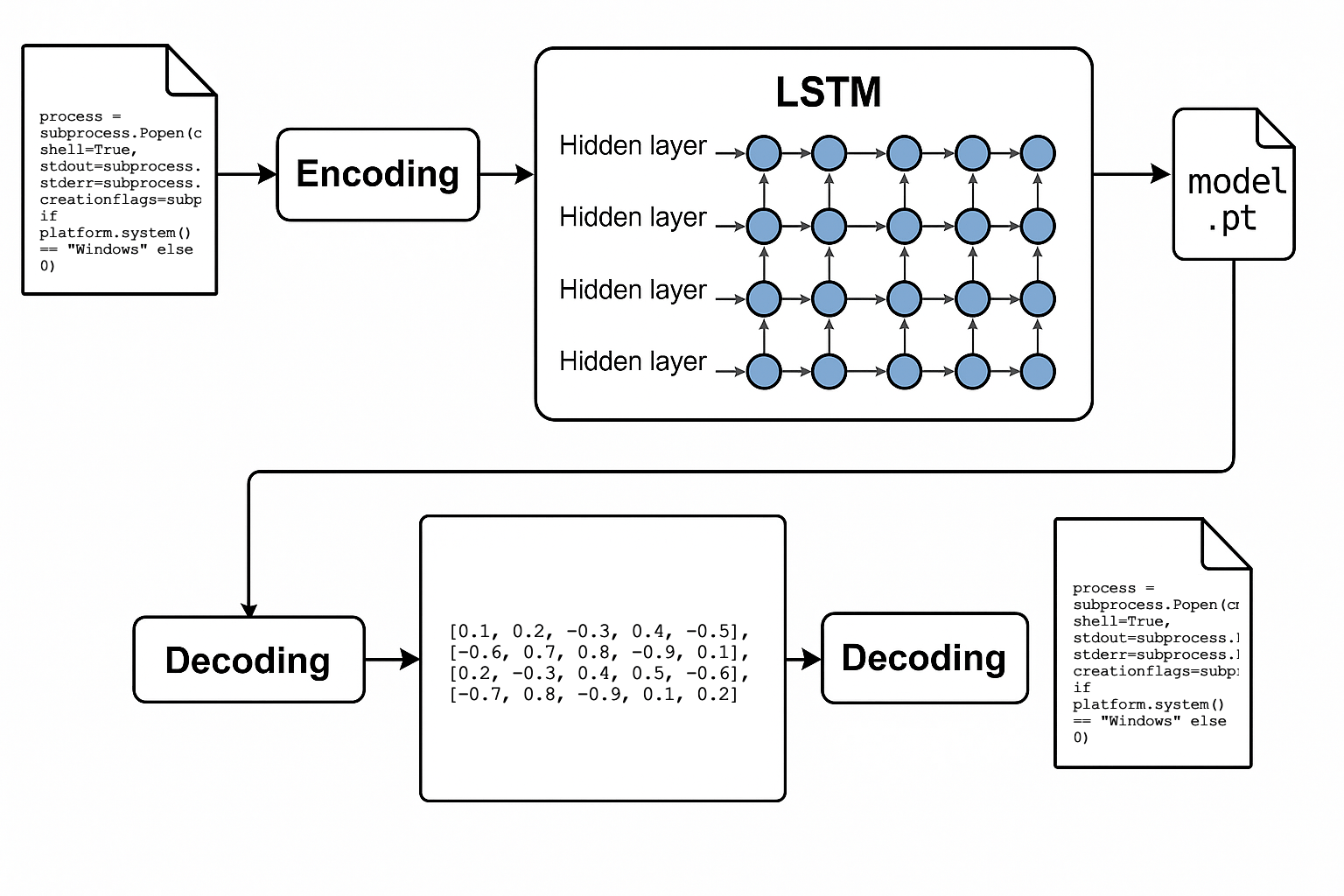

My prototype works as follows:

- Character Dictionary Building - the system creates a character-level dictionary along with a Beginning-of-Sequence (BOS) token

- Training for Memorization - the LSTM network is trained on a single file until it has memorized the file's contents completely

- Generation from BOS Token - during inference, the model generates the complete character sequence starting from the BOS token

- Integrity Verification - SHA-256 checksums of the original and generated files are compared
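The first and last steps above (dictionary building and integrity verification) can be sketched in plain Python. This is a minimal illustration, not the prototype's actual code: the token name `<BOS>`, the convention of reserving id 0 for it, and the helper names `build_vocab`, `encode`, `decode`, `sha256_hex` and `verify` are all hypothetical.

```python
from __future__ import annotations

import hashlib

BOS = "<BOS>"  # hypothetical name for the Beginning-of-Sequence token (id 0)


def build_vocab(text: str) -> tuple[dict[str, int], dict[int, str]]:
    """Map every distinct character to an integer id; id 0 is reserved for BOS."""
    stoi = {BOS: 0}
    for i, ch in enumerate(sorted(set(text)), start=1):
        stoi[ch] = i
    itos = {i: ch for ch, i in stoi.items()}
    return stoi, itos


def encode(text: str, stoi: dict[str, int]) -> list[int]:
    """Prepend the BOS id and convert each character to its id."""
    return [stoi[BOS]] + [stoi[ch] for ch in text]


def decode(ids: list[int], itos: dict[int, str]) -> str:
    """Convert ids back to characters, skipping the BOS id."""
    return "".join(itos[i] for i in ids if i != 0)


def sha256_hex(data: str) -> str:
    """SHA-256 checksum of the UTF-8 encoded text."""
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def verify(original: str, generated: str) -> bool:
    """Integrity check: the regenerated text must hash identically."""
    return sha256_hex(original) == sha256_hex(generated)


if __name__ == "__main__":
    source = 'print("hello, world")\n'  # harmless stand-in for a source file
    stoi, itos = build_vocab(source)
    ids = encode(source, stoi)
    print(len(stoi), ids[:5])
    print(verify(source, decode(ids, itos)))
```

In the real pipeline the `decode` input would come from the trained model rather than from `encode`; the round trip here only shows that the dictionary and the checksum comparison fit together.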

Intentional Use of the Overfitting Phenomenon

An alternative to EvilModel is to pack an entire program's code into a neural network by deliberately exploiting overfitting. I developed a prototype in PyTorch that trains an LSTM network intensively on a single source file until it has fully memorized the contents. Prolonged training turns the network's weights into a data container from which the file can later be reconstructed.

I confirmed that the technique works by generating code identical to the original and comparing the SHA-256 checksums of both. Similar results can be achieved with other architectures, such as GRUs or decoder-only Transformers, which shows that the approach is not tied to a single model type.
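A minimal PyTorch sketch of the memorize-then-regenerate idea follows. It is not the author's prototype: the layer sizes, learning rate, stopping rule and the choice to generate exactly `len(text)` characters (rather than, for example, stopping at an end token) are assumptions, and `CharLSTM`, `memorize`, `generate` and `sha256_hex` are hypothetical names. The "payload" is a harmless one-line string.

```python
import hashlib

import torch
import torch.nn as nn

BOS_ID = 0  # assumption: id 0 is reserved for the Beginning-of-Sequence token


class CharLSTM(nn.Module):
    """Hypothetical tiny character-level LSTM language model."""

    def __init__(self, vocab_size: int, hidden: int = 128):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, hidden)
        self.lstm = nn.LSTM(hidden, hidden, batch_first=True)
        self.head = nn.Linear(hidden, vocab_size)

    def forward(self, x, state=None):
        out, state = self.lstm(self.embed(x), state)
        return self.head(out), state


def memorize(text: str, max_steps: int = 1000, seed: int = 0):
    """Deliberately overfit the model on a single string."""
    torch.manual_seed(seed)
    stoi = {ch: i + 1 for i, ch in enumerate(sorted(set(text)))}
    itos = {i: ch for ch, i in stoi.items()}
    ids = torch.tensor([[BOS_ID] + [stoi[ch] for ch in text]])
    inputs, targets = ids[:, :-1], ids[:, 1:]  # each position predicts the next char
    model = CharLSTM(len(stoi) + 1)
    opt = torch.optim.Adam(model.parameters(), lr=1e-2)
    loss_fn = nn.CrossEntropyLoss()
    for _ in range(max_steps):
        logits, _ = model(inputs)
        loss = loss_fn(logits.reshape(-1, logits.size(-1)), targets.reshape(-1))
        opt.zero_grad()
        loss.backward()
        opt.step()
        # stop once every next-character prediction is correct and confident
        if loss.item() < 0.01 and bool((logits.argmax(-1) == targets).all()):
            break
    return model, itos


@torch.no_grad()
def generate(model: CharLSTM, itos: dict, length: int) -> str:
    """Greedy decoding from BOS for a fixed number of characters."""
    model.eval()
    x = torch.tensor([[BOS_ID]])
    state = None
    chars = []
    for _ in range(length):
        logits, state = model(x, state)
        nxt = int(logits[0, -1].argmax())
        chars.append(itos.get(nxt, ""))  # an unexpected BOS id maps to ""
        x = torch.tensor([[nxt]])
    return "".join(chars)


def sha256_hex(s: str) -> str:
    return hashlib.sha256(s.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    source = 'print("hello, world")\n'  # harmless stand-in for a source file
    model, itos = memorize(source)
    restored = generate(model, itos, len(source))
    print(sha256_hex(restored) == sha256_hex(source))
```

Because training uses teacher forcing on the exact sequence, once every next-character prediction is correct, greedy decoding from BOS feeds the model the same prefix it saw during training at every step, which is why the checksum comparison succeeds.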

The advantage of this kind of packer is that it lacks the typical behavioral patterns that traditional antivirus systems recognize. Instead of conventional encryption and decryption operations, the “unpacking” happens as part of the neural network's normal inference.

Challenges in Detection

A neural network used as an unconventional packer is difficult to detect because, to most analysis tools, the program looks like ordinary code calling a standard AI computation library. The ready-to-execute malicious code appears only at the network's output, which poses a significant challenge for typical antivirus heuristics.

One potentially suspicious behavior is writing the generated code to disk, but even this can be avoided with fileless malware techniques, in which the code is generated and executed directly in process memory.

Leveraging AI Hardware Accelerators

One of the most intriguing aspects of using AI models to hide malware is the possibility of leveraging the dedicated neural hardware accelerators found in many modern devices (e.g., the Apple Neural Engine or NVIDIA GPUs with Tensor Cores).

The main advantage of this approach is that less suspicious activity is visible at the CPU level, because the intensive computation runs on specialized chips. Resource monitors and security systems may see nothing unusual, since the process appears to be performing standard AI operations.

Moreover, typical dynamic analysis methods (sandboxing) have limited visibility into computations performed on GPUs or NPUs, which significantly complicates malware analysis. Process and resource monitoring systems often lack mechanisms for overseeing ML library operations, giving malware an additional layer of invisibility.

Technical Challenges for Attackers

Despite these advantages, the approach also poses some technical challenges. Attackers must prepare models compatible with the victim's hardware and may need to deliver them in the appropriate model format, which takes additional effort. However, modern frameworks support converting models across platforms, so this task is feasible.

Overall, neural packers could become an attractive alternative to traditional malware-hiding methods, much like earlier techniques that hid code in GPU memory. The rapid development of AI hardware may soon make this technique a significant cybersecurity threat.

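When extending this prototype, use the safetensors library to store model weights and avoid security issues such as “pickle attacks”: PyTorch's default checkpoint format (torch.save/torch.load) relies on Python's pickle, which can execute arbitrary code when an untrusted file is loaded. This is only a research project and is not meant for production use. Use it solely for educational and research purposes, not for creating malicious software.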

Update

- 2025-06-29 15:05 I posted this article on Reddit (r/MachineLearning), where it received a lot of positive feedback. You can find the original thread here.

- 2025-07-01 19:40 See also my new article on the same topic, where I answer some questions raised in the comments on Reddit.

If you want to cite this article, you can use the following BibTeX entry:

@misc{bednarski2025lstm,
  author = {Bednarski, Piotr Maciej},
  title  = {LSTM or Transformer as “malware packer”},
  year   = {2025},
  month  = {Jun},
  url    = {https://bednarskiwsieci.pl/en/blog/lstm-or-transformer-as-malware-packer/}
}